Posted 28 May

The ethics of AI in the health space.

Would you trust Doctor Chatbot with your health advice? The first Ethos event of 2025 explored the ethics of artificial intelligence (AI) chatbots in the health space. The Ethos Community of Practice aims to foster a culture of ethical questioning amongst researchers and the public.

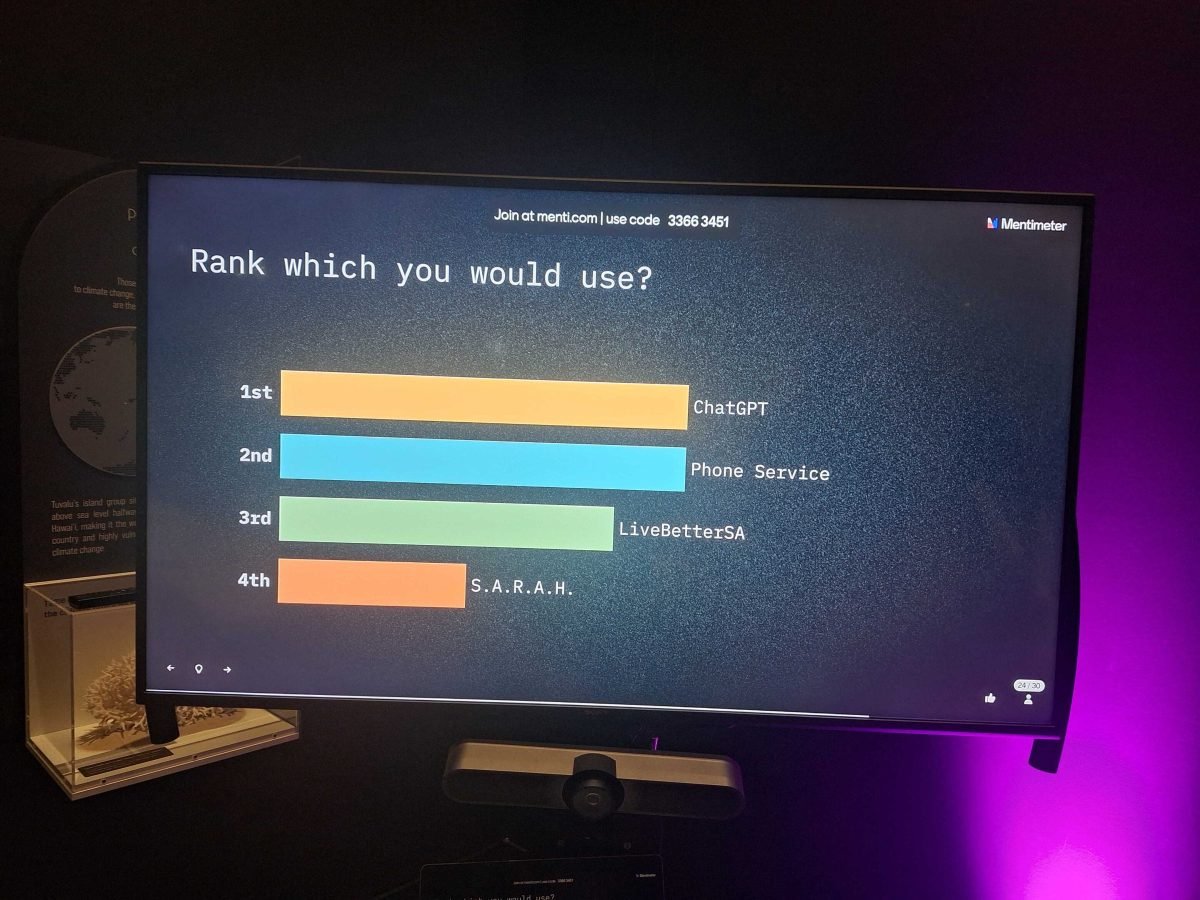

Dr Aaron Davis and Andrew Lymn-Penning began with a Mentimeter survey to gauge participants' perceptions of, and concerns about, large language model (LLM) chatbots like ChatGPT. Aaron also referenced research from the Harvard Business Review on how people are actually using AI. Pertinently, he noted that in 2025 "therapy and companionship" had replaced 2024's "generating new ideas" as the number one use case for AI. This suggests that the link between AI and mental health advice in particular is only growing stronger.

Following these provocations, Clare Peddie facilitated a discussion between Aaron, Andrew and Dr Rebecca Marrone about the ethics of trusting AI with our medical data and health advice, particularly in the youth mental health space. Participants also explored the reliability and biases of AI, and who bears accountability when it is wrong.

To illustrate the tensions at play when using chatbots for health advice, the panel discussed three different chatbots.

Firstly, Aaron discussed S.A.R.A.H., a chatbot developed by the World Health Organization (WHO) to give users accurate, trustworthy, WHO-endorsed health information. However, this "tightly controlled walled garden" limits S.A.R.A.H. to essentially "cut and paste… text out of a WHO report". Its stringent accuracy comes at the cost of detail, customisation, complex language and a conversational style.

Secondly, Aaron referenced the more open beta version of the LiveBetterSA chatbot. It has been fed dietary guidelines from reputable Australian health and academic sources, but also has "access to the internet in a limited capacity". Aaron added that users' personal data is "locked down… on Australian servers" and is not harvested. The chatbot therefore provides more customised advice than S.A.R.A.H., whilst still keeping controls on source reputability and personal data privacy.

Finally, there is the general-purpose ChatGPT, with access to most of the internet and no data privacy guarantees. Andrew noted that this type of AI runs a statistical model to find the most "generally accepted answer" to a question. According to Aaron, ChatGPT's superior usability, conversational style and customised answers come at the potential cost of unreliable advice, personal data breaches and "unknown" long-term risks. Are we comfortable accepting these long-term risks in exchange for immediate, 'human-like' responses to our dietary and mental health questions?

Can we trust the answers AI gives us? One participant asked who writes the hidden algorithms and constraints behind AI. Do these tools simply reinforce and amplify systemic biases in their answers? For example, Andrew pointed to an AI struggling to "generate an image of a female CEO… because there weren't many photos of female CEOs." Aaron therefore emphasised the need to broaden AI development and research beyond computer science and "randomised control trials" that focus only on binaries. Research should also include "onto-epistemological approaches" (that is, viewing scenarios holistically, as is common in Aboriginal knowledges) to help expose the implicit systemic biases in AI.

AI gets answers wrong, whether through implicit biases or outright 'hallucinations'. For example, Andrew referenced a chatbot recommending a beef recipe to a vegetarian. This is fairly harmless, but the stakes are higher if you are asking AI whether a mole might be cancerous. Aaron noted that for health questions we place our trust in the experience and knowledge of medical professionals, while accepting that they will sometimes get it wrong. If we transfer some of that trust to AI, who do we hold accountable when it is wrong?

One participant said that their daughter had been asking ChatGPT for advice and support on handling a conflict with a friend at school. Discussion then turned to the growing phenomenon of young people using ChatGPT for mental health support. Rebecca said that this interaction with an "open mental health chat bot" can carry "an insane amount of inherent risk".

Accordingly, one participant noted that AI shouldn't be used without a specific purpose, but should instead be "use[d] with intention". For example, Rebecca referenced her work with schools, where she is often asked to design mental health chatbots for students. Instead, she works with stakeholders to focus on the core issue, which in one case seemed to be a lack of resilience amongst Grade 10–11 students. Her team can then work with teachers and students to "develop AI to support resiliency". This AI would have strong safeguards, such as clear "trigger words" that, when typed by a student, bring a "human in the loop", such as a counsellor. This suggests there is cause for optimism about the role of AI in improving youth resilience.

The Ethos Community of Practice is made possible with funding through the Deputy Vice-Chancellor of Research and Enterprise. The previous Ethos forum investigated the ethics of health and safety in the workplace. We hold Ethos events regularly at MOD. Check out our events page for more details!

Samuel Wearne was the rapporteur for this forum and is a moderator at MOD.